Yesterday I attended the NJ School Boards Association's conference, "AI: Preparing for Today and Tomorrow," where education leaders from across New Jersey gathered to discuss one of the most pressing questions facing schools today: how do we implement AI responsibly? The conference brought together administrators, board members, technology coordinators, and students to share strategies, concerns, and real-world experiences.

What emerged was a complex picture of opportunity and challenge, from practical classroom applications to infrastructure demands to federal policy contradictions that could reshape how local districts govern AI use.

Opening Keynote: From Fear to Fascination

The day opened with Dr. Christopher Nagy and the MagicEdX team addressing what they called the journey "From Fear to Fascination." Their keynote focused on dispelling AI myths while demonstrating how AI can personalize learning, enhance student agency, and streamline administrative tasks.

The MagicEdX platform they demonstrated is an example of practical AI implementation: it uses AI to match students' career interests with local workplace learning opportunities and even suggests transportation options for students without cars. But beyond the technology demonstration, the keynote's value was in reframing AI from a threat to a tool. As one presenter put it, AI is about "choosing correctly": artificial intelligence is literally a "human-made tool for making informed choices."

Mid-Morning: Understanding AI's Promise and Pitfalls

Dr. Christopher Tienken from Seton Hall University led a session examining both the potential of and the concerns about large language model (LLM) AI in classroom instruction. His focus on educational equity was particularly important: he explored how AI might address learning barriers while acknowledging the risk of widening digital divides.

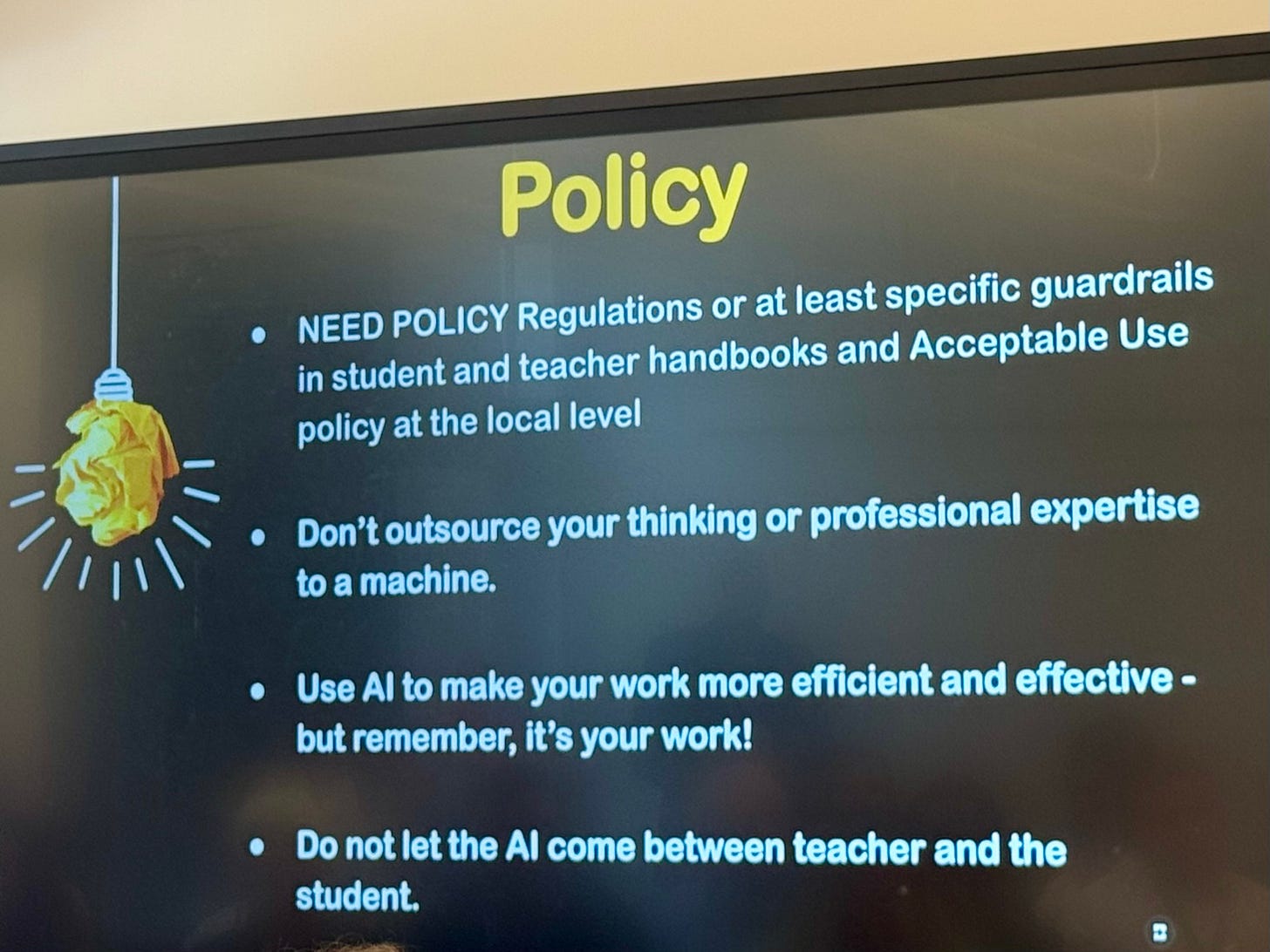

The session also covered board policy implications, emphasizing that districts need governance structures in place before widespread implementation. This theme would resurface throughout the day: the tension between AI's rapid evolution and the need for thoughtful, deliberate education policy development.

The Hidden Infrastructure Challenge

The next session I attended, on energy infrastructure, provided a sobering reality check that confirmed what I already knew: AI implementation isn't just about software. It demands fundamental infrastructure changes.

According to the presenters, AI-capable data centers need 5-10 times more electricity than traditional server rooms, plus sophisticated cooling, backup power, and increased bandwidth. For districts already struggling with technology budgets, this raises new questions about options such as shared services, solar with battery storage, or microgrids.

The key insight: this isn't just about adding capacity; it's about building resilience. As schools become increasingly technology-dependent, power outages essentially "put you out of business." AI amplifies this vulnerability.

Afternoon Deep Dive: East Brunswick's Strategic Approach

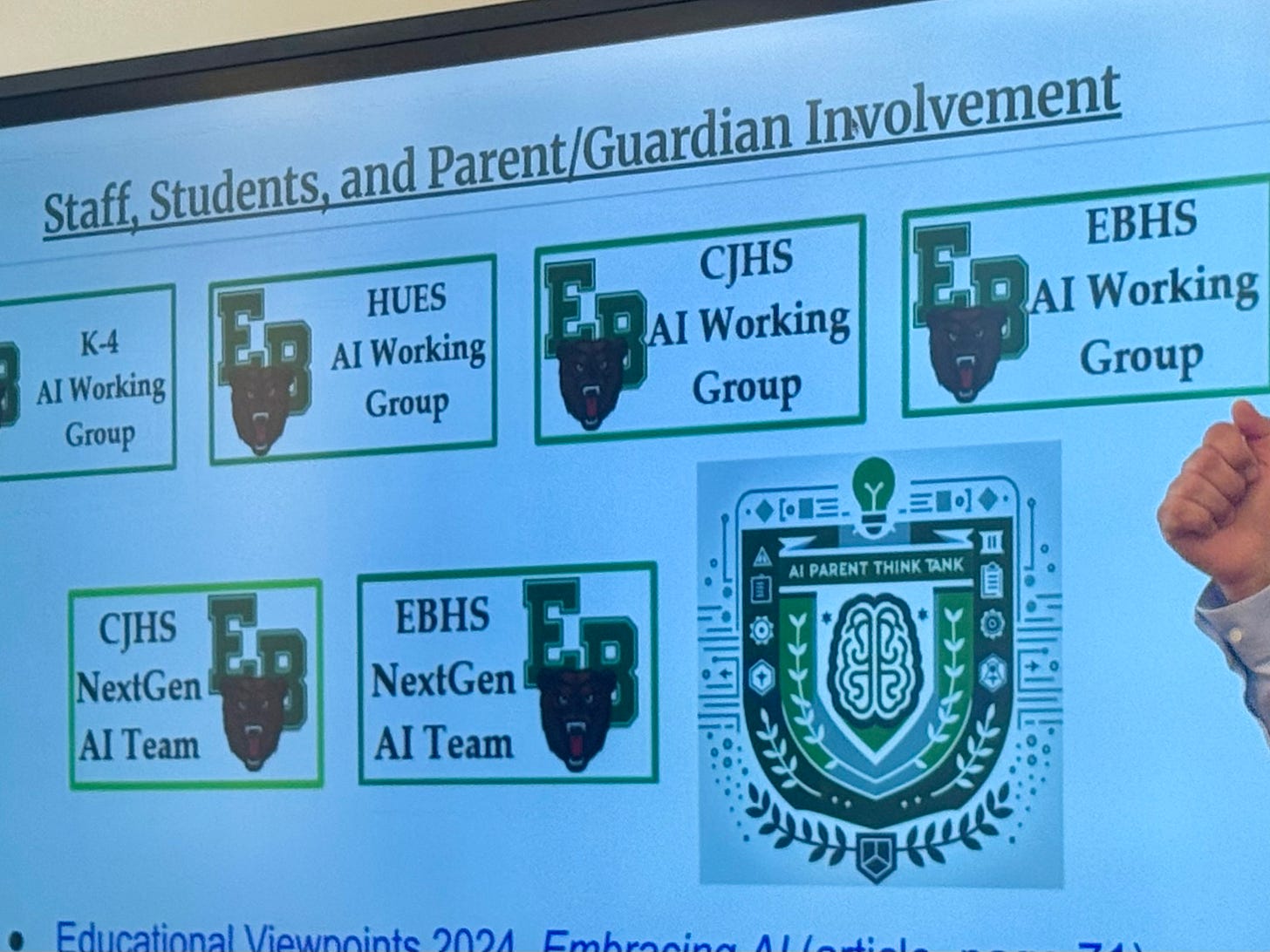

After lunch, I attended Herb Peluzzo's session on how East Brunswick Public Schools developed its comprehensive, district-wide AI strategy. His session, "From Vision to Reality," provided a concrete roadmap for moving beyond individual teacher experiments to district-wide implementation.

East Brunswick's approach emphasized stakeholder involvement and transparency, creating AI working groups that included teachers across subjects, students at both the junior high and high school levels, and parent committees. This is a smart approach because it brings in every important stakeholder, not just school leaders. The district developed what it calls "AI Guidelines" rather than rigid policies, recognizing that the technology evolves too quickly for traditional policy development timelines.

Their graduated implementation strategy varies by grade level:

K-4: Teacher-facing only. AI as a tool for educators to create differentiated materials, level texts, and generate assessment questions. Students get AI literacy awareness but no direct access.

5-6: Highly supervised introduction. Limited student access with heavy teacher oversight, primarily in computer electives and controlled environments.

7-12: Structured access with guidelines. Clear policies about appropriate use, citation requirements, and academic integrity expectations.

Peluzzo's key insight: start with guidelines, not policies. "Even imperfect guardrails are better than no guardrails," he noted. Guidelines let districts provide structure while keeping the flexibility to adapt as AI capabilities evolve. I agree with this approach: formal policy takes time to develop and is rigid once adopted, which makes it difficult to revise. That ability to change matters, especially given how rapidly AI is developing.

This graduated approach makes sense developmentally, but it raises critical questions. Should student access to AI tools be limited to 5th grade and above, even if younger students may already be using AI? How do we introduce AI literacy without overwhelming younger students?

Closing Panel: What Students Actually Think

The day concluded with the perspective we needed most: that of students themselves. Four seniors from Nottingham High School in Hamilton took the stage for a moderated discussion about their AI experiences and recommendations. These students cut through the adult speculation with startling clarity. They weren't naive teenagers stumbling through technology; they were digital natives who understood AI's potential for both learning and cheating, and they had specific frustrations with how adults were handling it.

"Teachers tell us we can't use AI, but then they use it themselves," one student observed. This wasn't rebellious complaining; it was a call for consistency and transparency that should make every educator uncomfortable.

The students are, in fact, quite wary of AI in general. They understand its potential for misuse and are cautious about applications such as help with editing essays. They also expressed concern about people viewing AI in overly human terms, emphasizing the importance of understanding that AI is not a person.

When I asked what support would have been most helpful during their high school experience, their answer was immediate: an AI literacy course. Not prohibition. Not fear-mongering. Education.

Most tellingly, when asked how AI should be implemented in schools, they advocated for integration across all classes rather than treating it as a separate subject. Their point is an important one: AI skills should be embedded within existing curricula rather than confined to isolated "AI classes."

The students also demonstrated a sophisticated awareness of AI's darker applications, specifically requesting education about deepfakes and why they're problematic. They're not asking to be protected from AI; they're asking to be educated about it.

The Policy Paradox: Federal Signals vs. Local Needs

Here's where things get complicated, and where both state Departments of Education and local school boards need to pay attention.

President Trump's executive order, "Advancing AI Education for American Youth," promises funding for AI literacy programs, workforce preparation, and educational technology integration. The order specifically encourages AI adoption in schools through sections focused on training, challenges, and competitive programs.

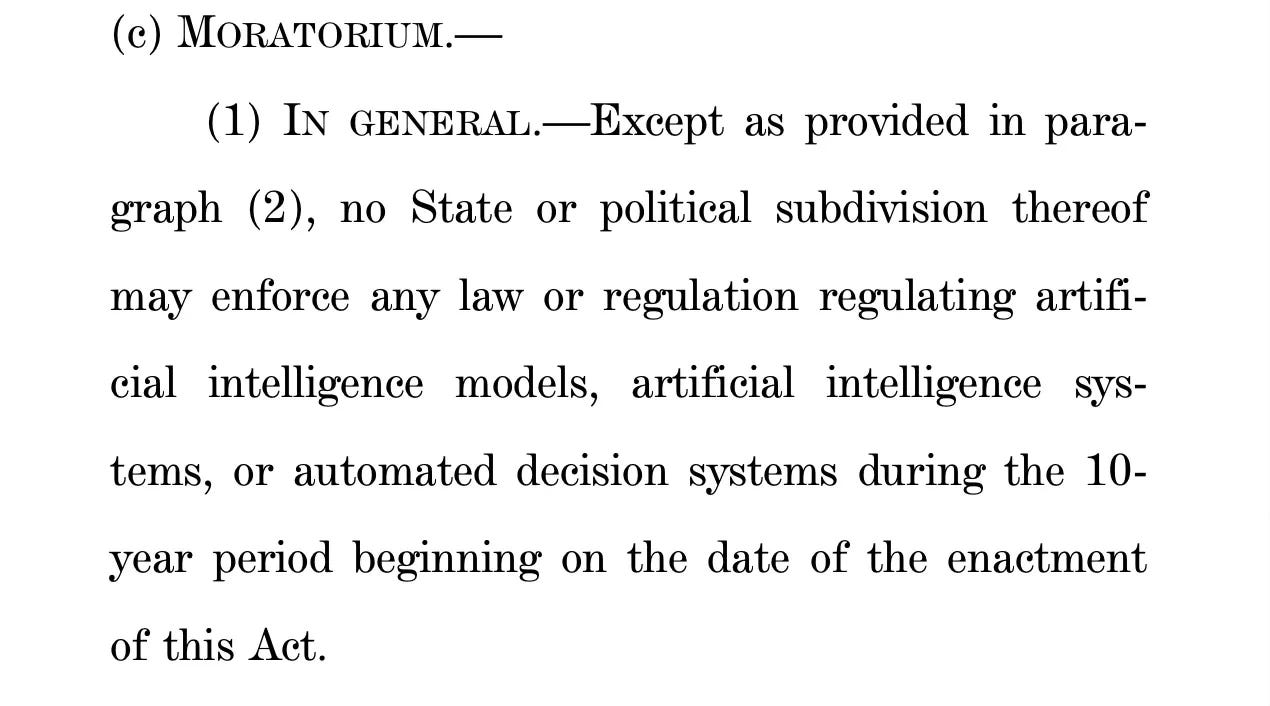

But buried in the HR 1 budget bill is a provision that would create a 10-year moratorium on AI regulation at the state and local levels. If enacted, it would bar states and school districts from creating their own AI oversight rules, restricting them from developing the very governance structures they need to implement AI responsibly.

This creates a policy paradox: if the budget bill passes with this provision, the federal government will be simultaneously encouraging AI adoption in schools while preventing state and local communities from creating the oversight mechanisms necessary to do it safely.

The Questions We Should Be Asking

The conference left me with more questions than answers, which might be the point. Here are the critical issues every school district should be grappling with:

Policy and Implementation:

Should AI tools be limited to 5th grade and above, or are younger students ready for earlier introduction?

How do we reconcile the Trump administration's AI education executive order with the 10-year moratorium on AI regulation in the administration-backed budget bill?

How do schools put a guidelines-first approach into practice before formal policies are in place?

Educational Practice:

How do we address the hypocrisy students identified: teachers and other adults using AI while prohibiting students from doing the same?

What would effective AI literacy curriculum look like across grade levels?

How do schools integrate AI across the entire curriculum?

How do we prepare students for deepfakes and synthetic media without creating fear?

Infrastructure and Equity:

How do districts fund the infrastructure requirements for meaningful AI implementation?

What happens to schools that can't afford AI infrastructure? Does this create new digital divides?

Should districts share AI resources regionally to reduce individual costs?

What Students Are Teaching Us

The most powerful insight from the conference came from those four seniors: students want education, not prohibition.

They're already encountering AI in their daily lives, from Google searches that include AI summaries to Snapchat's chatbot, "My AI," and features like AI Snaps. Pretending they can avoid AI is like pretending they can avoid the internet.

Instead, they're asking for:

Transparency about when and how teachers use AI

Consistency in policies that apply to both students and educators

Education about both AI's capabilities and limitations

Integration that makes AI literacy part of every subject, not an isolated skill

They understand that AI isn't a person, but they want formal education about what it actually is. They know about the potential for cheating, but they also see legitimate applications for learning.

Most importantly, they're thinking about implications adults miss, like the need to understand deepfakes and synthetic media in an age of increasing misinformation.

The Path Forward

Every school board should be having conversations about AI implementation now, not waiting for perfect policies or complete funding. The technology is advancing too quickly, and students are already too sophisticated, for delay to serve them well.

Start with these immediate steps:

Survey your stakeholders - What do your students, teachers, and parents actually know about AI?

Assess your guidelines - Do you have any AI guidelines or policies, or are teachers making individual decisions?

Understand federal implications - How might HR 1's moratorium affect your local policy development?

Engage students as partners - They're the ones living with these decisions. Include them in the planning.

The conference made one thing clear: AI in education isn't coming; it's here. The question isn't whether to implement AI, but how to do it thoughtfully, equitably, and with the input of the students whose education we're trying to improve.

Those four seniors on the panel understood something many adults are still learning: AI is a tool, and like any tool, it can be used well or poorly. Our job isn't to ban the hammer (a metaphor from the opening keynote); it's to teach proper construction techniques.

This post was written by me, with editing support from AI tools, because even writers appreciate a sidekick.